Ranking Nodes in Temporal Graphs

QNode ranking in temporal networks are often impacted by heterogeneous context from node content, temporal, and structural di- mensions. This project introduces TGNet, a deep learning framework for node ranking in heterogeneous temporal graphs. TGNet utilizes a variant of Recurrent Neural Network to adapt context evolution and extract context features for nodes. It incorporates a novel influence network to dynamically estimate temporal and structural influence among nodes over time. To cope with label sparsity, it integrates graph smoothness constraints as a weak form of supervision. We show that the application of TGNet is feasible for large-scale networks by developing efficient learning and inference algorithms with optimization techniques. Using real-life data, we experimentally verify the effectiveness and efficiency of TGNet techniques. We also show that TGNet yields intuitive explanations for applications such as alert detection and academic impact rank- ing, as verified by our case study.

Why Ranking Nodes in Temporal Graphs is Different From in Static Graphs?

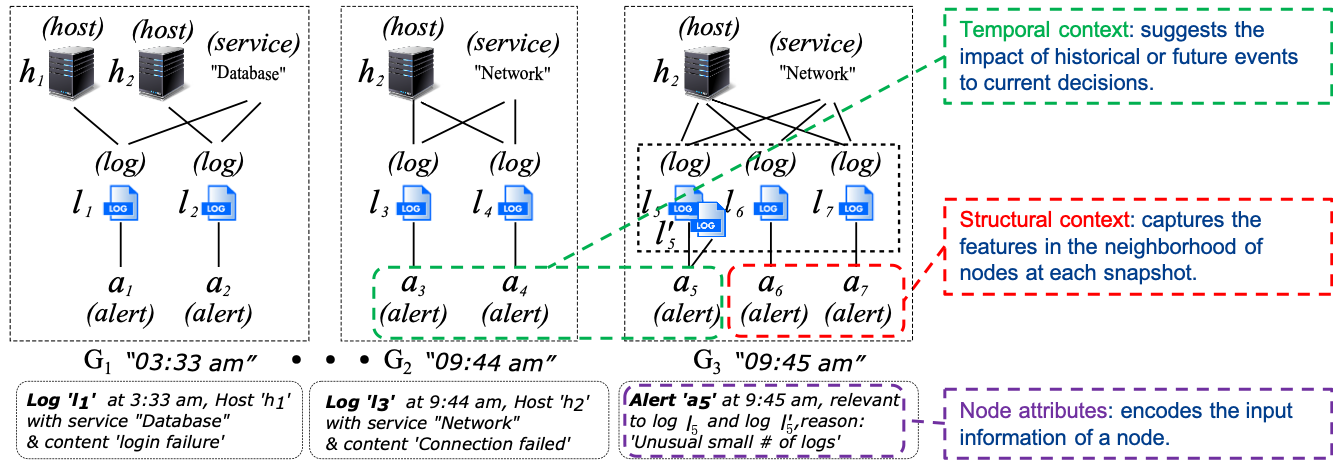

Temporal graphs have been widely applied to model dynamic networks. A temporal graph is a sequence of graph snapshots, where each snapshot (G,t) encodes a graph G occurs at an associated timestamp t. Emerging applications call for efficient predictive models that can effectively suggest and maintain the nodes with high priority in dynamic networks. The need for such models is evident in causal analysis, anomaly and attack detection, and link prediction in social networks.

Learning to rank node in static graphs has been studied. A common practice in prior work is to make use of latent features from graph structures to learn ranking functions. Learning node ranks in dynamic networks are nevertheless more involved than its counterpart in static networks. In particular, the node ranks can be influenced by rich context from heterogeneous node information, network structures, and temporal perspectives.

While desirable, learning to rank nodes in temporal graphs is more challenging than its counterpart over static graphs.

- Context learning. Context features of a node are formed by cer- tain activities related to this node. Such features offer us impor- tant sources of information to differentiate the roles that different nodes play in node ranking. In temporal graphs, context features are characterized from both structural and temporal dimensions.

- Context dynamics. Context features of a node bear constant changes over time. Such dynamics require effective and expressive models that can capture context evolution over time.

- Label sparsity. Labels provided by users are typically sparse and cover a small fraction of nodes. For example, system admins and anti-malware software may only provide a few ranked examples over months of data with thousands of alerts. This calls for effective learning that can be generalized from sparsely labeled data.

- Time cost. Efficient algorithms should be developed to support fast learning and ranking upon the arrival of new nodes. This requires effective optimization and provable performance guarantees for both learning and inference cost.

Overview of TGNet Model.

Reduced summaries can be used in knowledge search, query suggestion and fact checking in knowledge graphs.

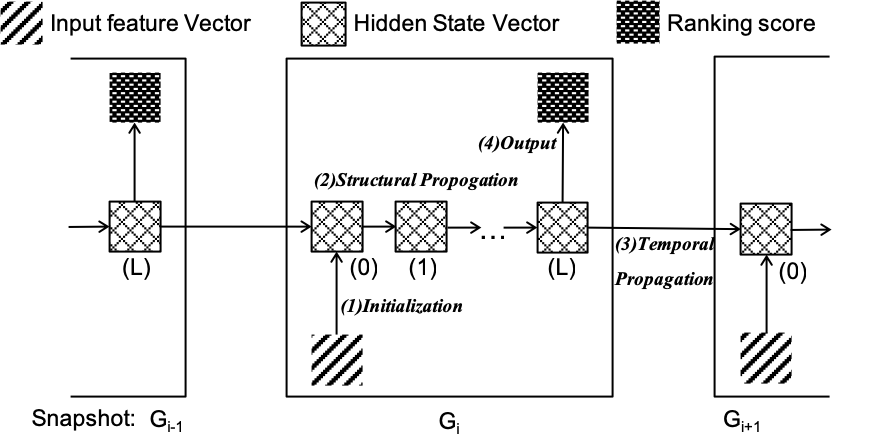

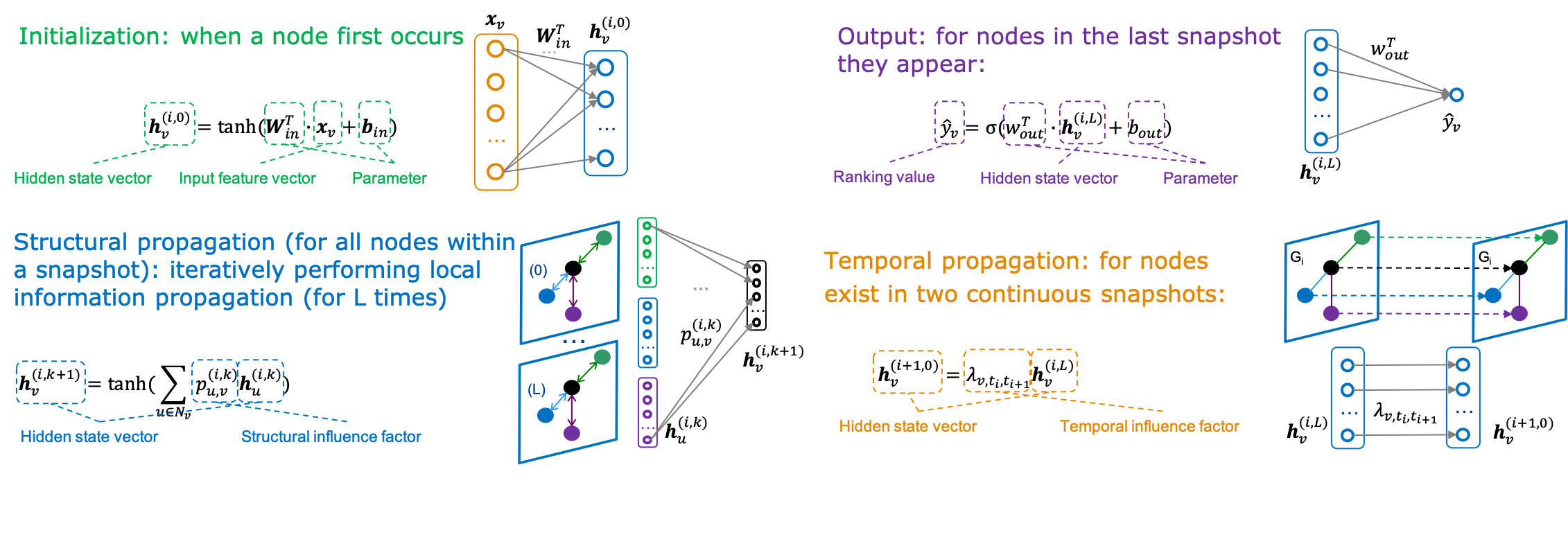

- Initialization layer: projects input feature vector into hidden state space;

- Structural propagation layer: exchanges neighborhood information in a snapshot;

- Temporal propagation layer: propagates temporal influence between snapshots;

- Output layer: transforms hidden states to ranking scores.

Make the Model More Efficient?

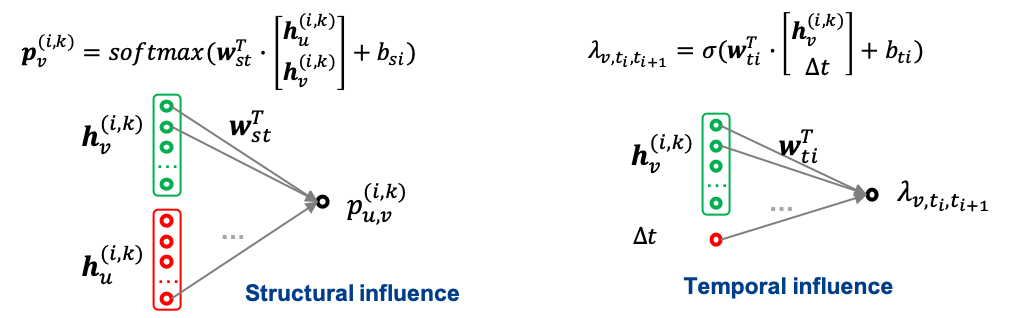

We introduce influence network, a novel network layer utilized by TGNet to cope with context dynamics. Unlike conventional methods that adopt fixed pairwise influence influence network layer takes updated node context features as input, and dynamically estimates the amount of temporal and structural influence among the nodes.

“Node-centric” vs. “Edge-centric”. Conventional methods adopts “edge-centric” influence model, where the parameters are associated to edges. Nevertheless, the assumption of fixed edge influence is hard to hold for context changes. Moreover, it is daunting for users to choose edge types with expected generalization power for unknown testing data. In contrast, TGNet adopts an influence network layer, denoted as InfNet, which uses a “node-centric” approach to model influence. The layer InfNet is devised based on two intuitions: (1) The influence between two nodes is conditioned by their contexts; and (2) The node context is determined by its hidden state.

Training the Model

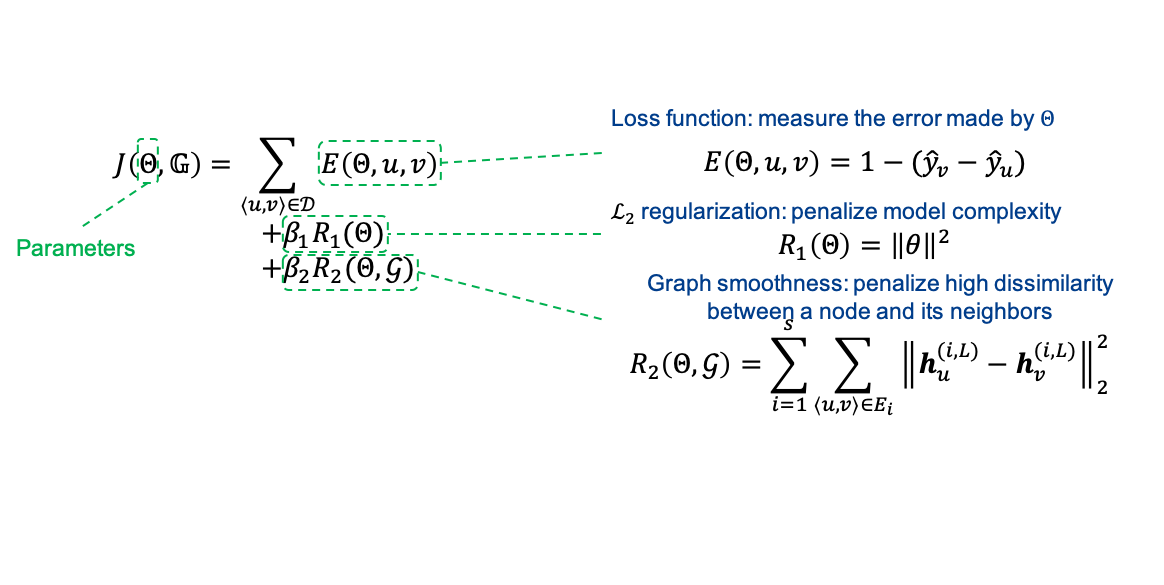

Given labeled data, we introduce an end-to-end training algorithm that jointly learns the model parameters.

Datasets

We conducted experiments using four real-world datasets.

- System Log Dataset (SLD) is a private system log dataset from NEC labs, including 30 days’ system logs generated by a cluster of 16 hosts. Using log analysis tool NGLA, we obtained a remporal graph and we focus on ranking alert nodes.

- ISCX Intrusion detection data (IDS) is a network intrusion dataset. In this dataset, we are interested in packet node ranking: the higher one packet node is ranked, the more likely it indicates an attack.

- Microsoft academic graph (MAG) is an academic network. We sampled 100K author ranking pairs based on their H -index value, and focus on learning to rank author nodes.

- Amazon product co-purchasing network (APC) is a network describing the the product information and related reviews. We focus on learning to rank the salesrank value for each product and sampled 100k product pairs.

| Datasets | SLD | IDS | MAG | APC |

| # of nodes | 61.4K | 5.7M | 2.5M | 2.1M |

| # of edges | 258.1K | 11.5M | 16.2M | 9.5M |

| # of snapshots | 19.4K | 66.7K | 12K | 1.5K |

| # of training node pairs | 20K | 100K | 100K | 100K |

Publications

- TGNet: Learning to Rank Nodes in Temporal Graphs. [Paper][Slide]

ACM International Conference on Information and Knowledge Management(CIKM), 2018.

Qi Song, Bo Zong, Yinghui Wu, Lu-An Tang, Hui Zhang, Guofei Jiang and Haifeng Chen

Acknowledgements

This project was done when Qi was an intern at NEC Labs America and is supported in part by NSF IIS-1633629.